Compositional Learning for Robot Autonomy via Modularity and Abstraction

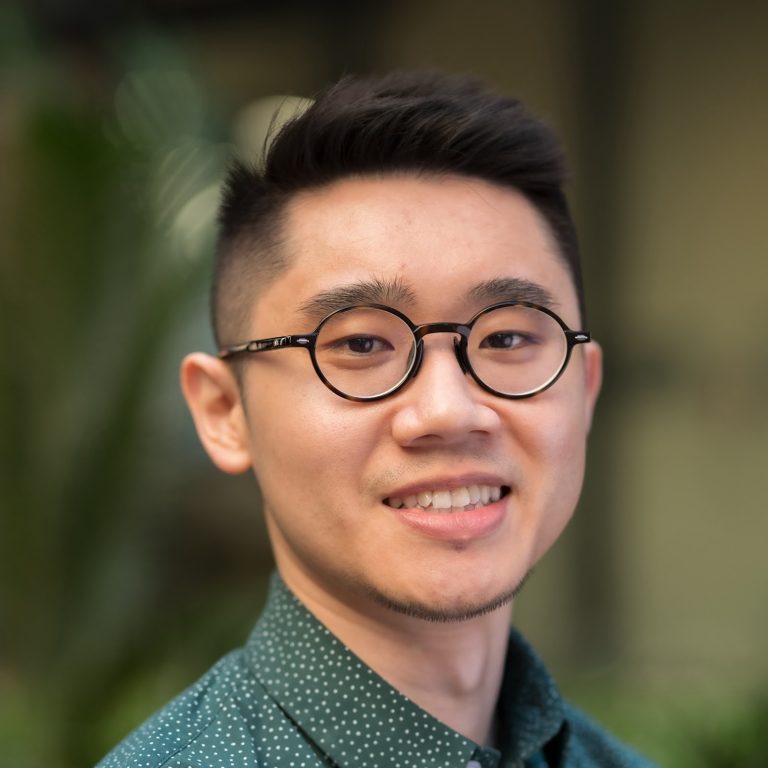

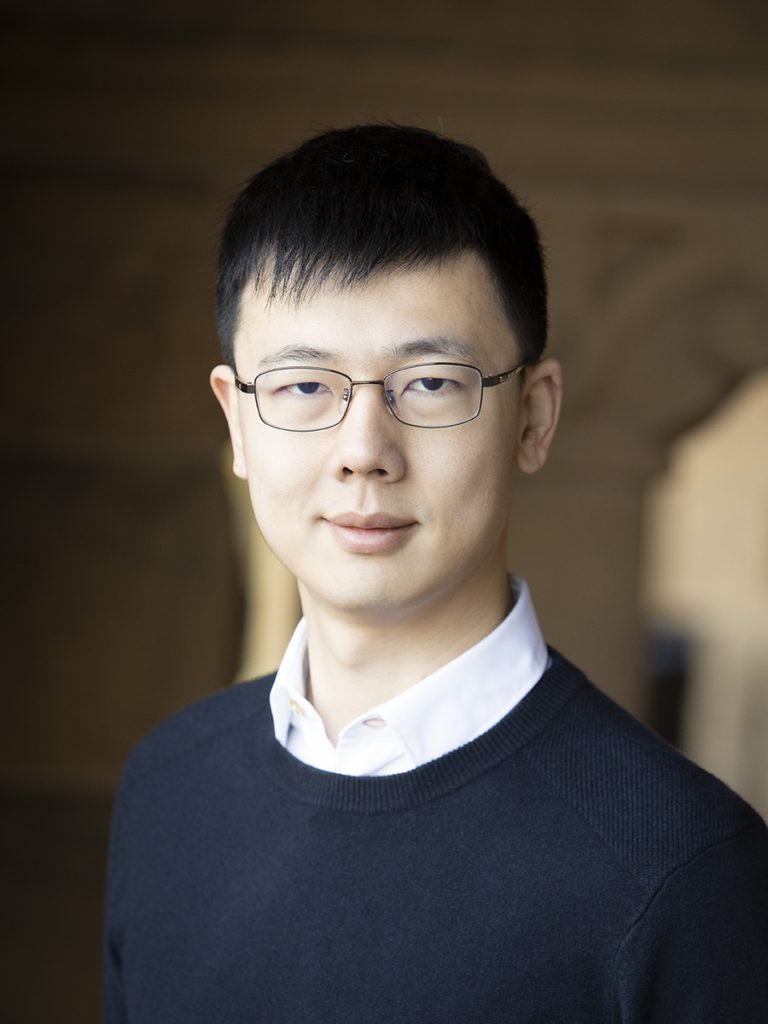

Yuke Zhu is an Assistant Professor in the Department of Computer Science at UT Austin and the director of the Robot Perception and Learning Lab. He received his Master’s and Ph.D. degrees from Stanford University. His research lies at the intersection of robotics, machine learning, and computer vision. He builds computational methods for perception and control that give rise to intelligent robot behaviors.

Building robot intelligence for long-term autonomy demands robust perception and decision-making algorithms at scale. Recent advances in deep learning have achieved impressive results on end-to-end learning of robot behaviors from pixels to torques. However, the prohibitive cost of training sophisticated behaviors end to end has taught us that “there is no ladder to the moon.” I argue that functional decomposition of the pixels-to-torques problem via modularity and abstraction is the key to scaling up robot learning methods. In this talk, I will present our recent work on compositional modeling of robot autonomy. I will discuss our algorithms for developing state and action abstractions from raw signals. Building on these abstractions, I will introduce our work on neuro-symbolic planners that achieve compositional generalization in long-horizon manipulation tasks.