Perceive Before Learning to Plan or Act: Towards End-to-End Perception for An Embodied Agent in Human Crowded Environments

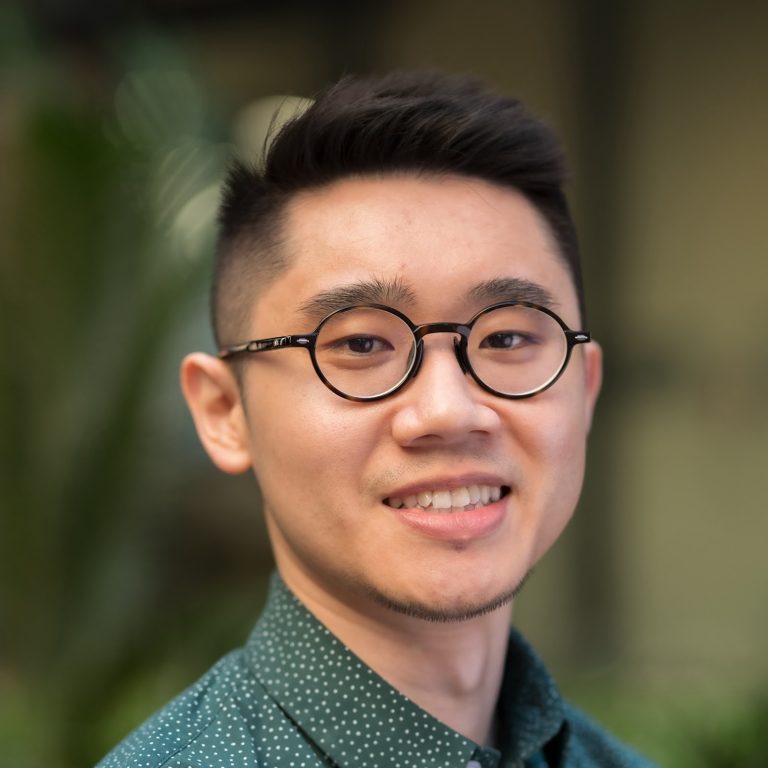

Hamid Rezatofighi is a lecturer at the Faculty of Information Technology, Monash University, Australia. Before that, he was an Endeavour Research Fellow at the Stanford Vision Lab (SVL), Stanford University, and a Senior Research Fellow at the Australian Institute for Machine Learning (AIML), the University of Adelaide. He received his PhD from the Australian National University in 2015. He has published over 50 top-tier papers in computer vision, AI and machine learning, robotics, medical imaging and signal processing, and has been awarded several grants, including the recent ARC Discovery 2020 grant. He served as publication chair for ACCV18 and as area chair for CVPR20, WACV21 and CVPR2022. His research interests include visual perception tasks, especially those required for an autonomous robot to navigate in a human environment, such as object/person detection, multiple object/people tracking, trajectory forecasting, social activity and human pose prediction, and autonomous social robot planning. He also has research expertise in Bayesian filtering, estimation and learning using point processes and finite set statistics.

To operate, interact and navigate safely in dynamic human environments, an embodied agent, e.g. a mobile social robot, must be equipped with a reliable perception system that not only understands the static environment around it, but also perceives and predicts intricate human behaviours in this environment while considering their physical and social decorum and interactions. To this end, the perception system needs to be a multitask model spanning different levels, from basic perception modules, such as detection, tracking, object/human pose estimation and object/human reconstruction, to high-level perception problems, such as reasoning about human-to-human (social) and human-to-scene context (physical) interactions. All these modules should also be end-to-end trainable, making it feasible to deploy the system in the loop of perception and planning. The overarching aim of my research with my team is to develop such a perception system. In this talk, I will briefly present some of my team’s previous and recent work targeting this ambitious goal. I will elaborate on three recent works: our end-to-end multi-object tracking framework, human motion forecasting, and human social grouping and activity. I will also introduce JRDB, a unique dataset and benchmark created for training and evaluating such an end-to-end perception system.