Learning to Vary 3D Models for Universally Accessible 3D Content Creation

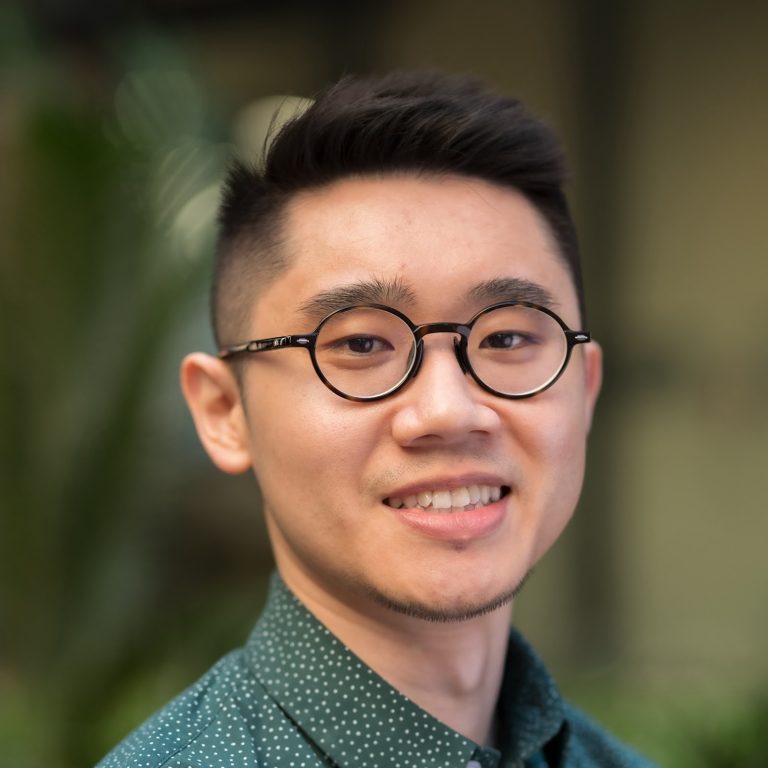

Mikaela Angelina Uy is a third-year CS Ph.D. student at Stanford University, advised by Leonidas Guibas. Her research focuses on 3D shape deformation and variation generation, shape analysis, and geometry processing, with the goal of making 3D content creation universally accessible and useful. She received her Bachelor's degree, with a double major in Mathematics and Computer Science, from the Hong Kong University of Science and Technology in 2017, and her Master's in Computing from the National University of Singapore in 2018. She is currently a research intern at Google and has previously interned at Adobe Research and Autodesk Research.

Digitizing the physical world requires creating 3D content that faithfully reflects real objects and environments. However, creating and designing high-quality 3D models is a tedious and difficult process, even for expert designers. My goal is to automate 3D content creation and make it universally accessible to all types of users, producing shapes that rival artist-generated models in fidelity, level of detail, and overall quality. Rather than building shapes from scratch, we can leverage existing high-quality 3D repositories and learn to generate new shapes as deformations or variations of these models, yielding a wide array of completely new content. I will first present a target-centric approach that retrieves and deforms existing models from CAD databases to fit a given target image or scan. This approach produces output shapes that not only match the desired target but also retain the original quality and fine details of the CAD model. Finally, I will show our initial efforts toward user interaction and control in shape deformation: an approach that predicts source-dependent deformation handles, enabling user-controllable and expressive shape variation.