
Spoken language understanding (SLU) is a crucial component of task-oriented dialogue systems, and it typically covers two natural language understanding tasks: intent detection and slot filling. Intent detection aims to identify a speaker’s intent from a given utterance, while slot filling aims to extract from the utterance the correct argument values for the slots of that intent. Although Vietnamese is the 17th most spoken language in the world (about 100M speakers), data resources for Vietnamese SLU are limited. To the best of our knowledge, there is no public Vietnamese dataset available specifically for either intent detection or slot filling.
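To make the two tasks concrete, one common way to represent a training example is a single intent label for the whole utterance plus one BIO tag per token, from which slot values can be read off. The sketch below assumes that representation; the English ATIS-style utterance and label names are illustrative, and `bio_to_spans` is a hypothetical helper, not part of the released code.

```python
from typing import List, Optional, Tuple


def bio_to_spans(tokens: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Group BIO-tagged tokens into (slot_type, value) pairs.

    An "I-" tag whose type differs from the open span is treated leniently
    as the start of a new span.
    """
    spans: List[Tuple[str, str]] = []
    current_type: Optional[str] = None
    current_tokens: List[str] = []
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-") or (tag.startswith("I-") and tag[2:] != current_type):
            # A new span starts: flush the previous one first.
            if current_type is not None:
                spans.append((current_type, " ".join(current_tokens)))
            current_type, current_tokens = tag[2:], [token]
        elif tag.startswith("I-"):
            # Continuation of the open span.
            current_tokens.append(token)
        else:
            # "O" closes any open span.
            if current_type is not None:
                spans.append((current_type, " ".join(current_tokens)))
            current_type, current_tokens = None, []
    if current_type is not None:
        spans.append((current_type, " ".join(current_tokens)))
    return spans


# Illustrative example: one intent label for the utterance, one tag per token.
tokens = "show me flights from new york to denver".split()
tags = ["O", "O", "O", "O",
        "B-fromloc.city_name", "I-fromloc.city_name", "O", "B-toloc.city_name"]
intent = "atis_flight"
print(intent, bio_to_spans(tokens, tags))
```

Intent detection predicts `intent` as a single label, while slot filling predicts the `tags` sequence; the slot values are then recovered from the tags.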

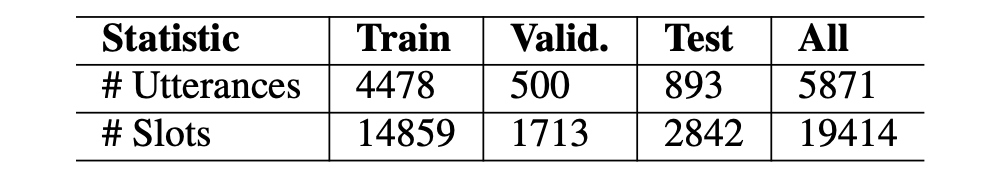

Our dataset construction process includes three manual phases. The first phase creates a set of raw, natural Vietnamese utterances by translating the ATIS dataset, a popular benchmark for intent detection and slot filling in the flight travel domain. The second phase projects the intent and slot annotations from ATIS onto its Vietnamese-translated version. The last phase fixes inconsistencies among the projected annotations.
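The projection in the second phase was done manually. Purely to illustrate what projecting slot annotations means, the sketch below assumes a hypothetical word alignment between an English utterance and its Vietnamese translation and copies BIO slot tags across it. `project_bio`, the alignment and the sentence pair are all made up for illustration and are not taken from PhoATIS.

```python
from typing import List, Optional, Tuple


def project_bio(src_tags: List[str], alignment: List[Tuple[int, int]],
                tgt_len: int) -> List[str]:
    """Project BIO slot tags from source tokens onto aligned target tokens.

    `alignment` holds (source_index, target_index) pairs. Adjacent target
    tokens of the same slot type are merged into one span, so two
    back-to-back spans of the same type would still need manual fixing.
    """
    # Step 1: give each target token the slot type of its first aligned source token.
    tgt_types: List[Optional[str]] = [None] * tgt_len
    for s, t in alignment:
        tag = src_tags[s]
        if tag != "O" and tgt_types[t] is None:
            tgt_types[t] = tag[2:]  # strip the "B-" / "I-" prefix
    # Step 2: rebuild B-/I- prefixes from runs of equal slot types.
    tgt_tags: List[str] = []
    prev: Optional[str] = None
    for slot_type in tgt_types:
        if slot_type is None:
            tgt_tags.append("O")
        elif slot_type == prev:
            tgt_tags.append("I-" + slot_type)
        else:
            tgt_tags.append("B-" + slot_type)
        prev = slot_type
    return tgt_tags


# Hypothetical sentence pair and alignment, for illustration only.
src_tokens = "flights from hanoi to da nang".split()
src_tags = ["O", "O", "B-fromloc.city_name", "O",
            "B-toloc.city_name", "I-toloc.city_name"]
tgt_tokens = "chuyến bay từ hà nội đến đà nẵng".split()
alignment = [(0, 0), (0, 1), (1, 2), (2, 3), (2, 4), (3, 5), (4, 6), (5, 7)]
print(list(zip(tgt_tokens, project_bio(src_tags, alignment, len(tgt_tokens)))))
```

A mechanical projection like this can produce errors (for example, merged spans or tags attached to the wrong syllables), which is the kind of inconsistency a later manual pass has to catch.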

Note that during the translation phase, we require adaptive modifications so that the translated utterances reflect real-world scenarios of airline booking in Vietnam. We also require spoken modalities (e.g., disfluency, word repetition and collocation) to be preserved as much as possible, so that the translated dataset is correct, natural and close to real-world usage in Vietnam.

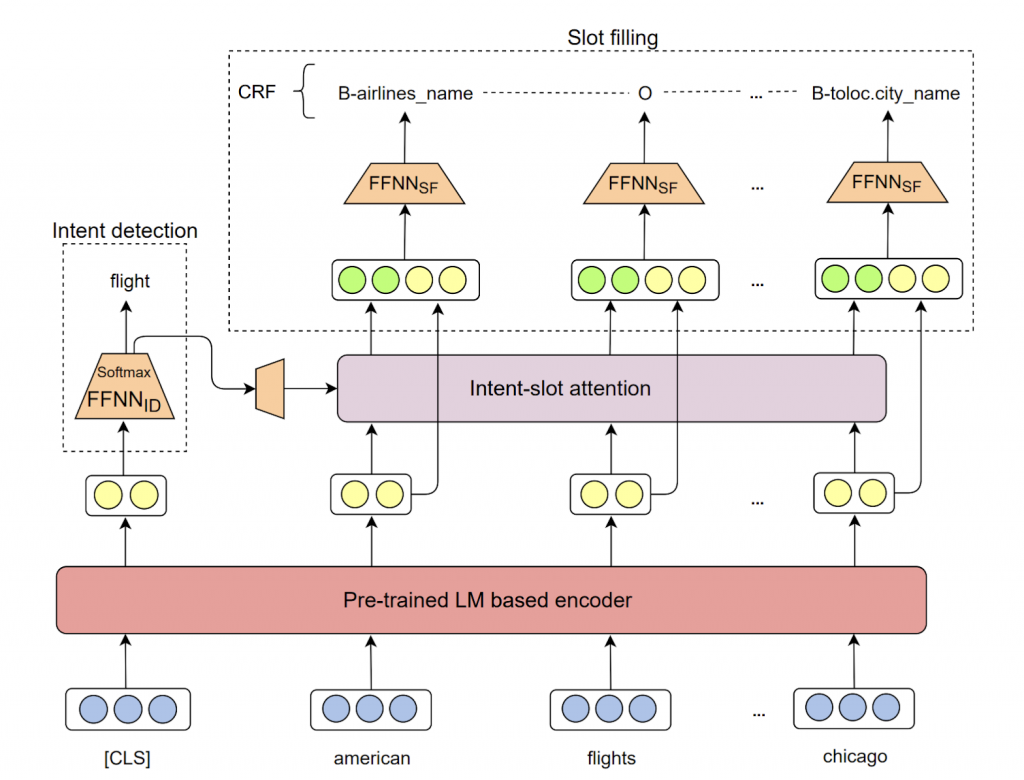

Figure 1 illustrates the architecture of our joint model, named JointIDSF, which consists of four layers: an encoding layer (i.e., the encoder) that encodes the input utterance into a contextualized representation; an intermediate intent-slot attention layer that explicitly captures the relationship between intent and slot information; and two decoding layers, for intent detection and slot filling, that output the corresponding intent and slot labels.
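The slot-filling decoding layer is described here only at a high level. Since JointIDSF extends JointBERT+CRF, it is reasonable to assume a linear-chain CRF on top of per-token slot scores; the sketch below shows standard Viterbi decoding for such a layer. The function name, the three-tag label set and all scores are hypothetical toy values, not taken from the released code.

```python
from typing import List, Tuple


def viterbi_decode(emissions: List[List[float]], transitions: List[List[float]],
                   start: List[float], end: List[float]) -> Tuple[List[int], float]:
    """Return the highest-scoring tag sequence of a linear-chain CRF and its score.

    emissions[t][j]:   score of tag j at position t (from the slot classifier)
    transitions[i][j]: score of moving from tag i to tag j
    start[j], end[j]:  scores for starting / ending the sequence in tag j
    """
    n_tags = len(start)
    # score[j] = best score of any partial path ending in tag j at the current position.
    score = [start[j] + emissions[0][j] for j in range(n_tags)]
    backpointers: List[List[int]] = []
    for t in range(1, len(emissions)):
        new_score: List[float] = []
        pointers: List[int] = []
        for j in range(n_tags):
            best_i = max(range(n_tags), key=lambda i: score[i] + transitions[i][j])
            pointers.append(best_i)
            new_score.append(score[best_i] + transitions[best_i][j] + emissions[t][j])
        score = new_score
        backpointers.append(pointers)
    final = [score[j] + end[j] for j in range(n_tags)]
    best_last = max(range(n_tags), key=lambda j: final[j])
    # Follow the back-pointers from the last position to recover the full path.
    path = [best_last]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, final[best_last]


# Toy tag set: 0 = "O", 1 = "B-x", 2 = "I-x".
# "I-x" may not start a sequence or follow "O" (large negative scores).
NEG = -1e4
transitions = [[0.0, 0.0, NEG], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
start, end = [0.0, 0.0, NEG], [0.0, 0.0, 0.0]
emissions = [[0.0, 1.0, 2.0], [0.0, 0.0, 3.0]]
print(viterbi_decode(emissions, transitions, start, end))  # ([1, 2], 4.0)
```

At the first position "I-x" has the highest emission score, but the transition constraints make the CRF choose the well-formed "B-x I-x" sequence instead. This is the usual motivation for adding a CRF over independent per-token softmax decisions.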

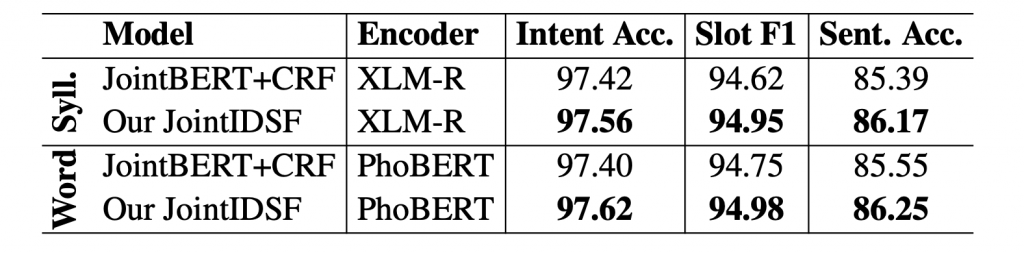

Our JointIDSF can be viewed as an extension of the recent state-of-the-art JointBERT+CRF model, where we introduce the intent-slot attention layer to explicitly incorporate intent context information into slot filling.
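The exact formulation of the intent-slot attention layer is given in the paper. The sketch below is a simplified stand-in under stated assumptions. It builds a "soft" intent label embedding as the intent decoder's softmax distribution weighting a table of intent label embeddings, as the summary describes. It then uses that embedding as an attention query over the token vectors, and appends the weighted intent embedding to each token's vector before slot decoding. The function names and this combination rule are hypothetical, not the released JointIDSF implementation.

```python
import math
from typing import List

Vector = List[float]


def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def soft_intent_embedding(intent_logits: Vector, label_embeddings: List[Vector]) -> Vector:
    """Probability-weighted average of intent label embeddings (a "soft" label)."""
    probs = softmax(intent_logits)
    dim = len(label_embeddings[0])
    return [sum(p * emb[d] for p, emb in zip(probs, label_embeddings)) for d in range(dim)]


def intent_slot_attention(token_vectors: List[Vector], intent_vec: Vector) -> List[Vector]:
    """Hypothetical simplification: the intent vector is the query, the token
    vectors are the keys (same dimension assumed), and each output is the
    token vector concatenated with the intent vector scaled by its weight."""
    dim = len(intent_vec)
    scores = [sum(h[d] * intent_vec[d] for d in range(dim)) / math.sqrt(dim)
              for h in token_vectors]
    weights = softmax(scores)  # one weight per token, summing to 1
    return [h + [w * x for x in intent_vec] for h, w in zip(token_vectors, weights)]


# Toy example: 2 intent labels, 3 tokens, 2-dimensional vectors.
intent_vec = soft_intent_embedding([0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
token_vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
slot_features = intent_slot_attention(token_vectors, intent_vec)
print(intent_vec, slot_features)
```

Because the intent embedding is a probability-weighted mixture rather than the embedding of the single argmax label, an uncertain intent prediction passes on a blended context instead of committing to one possibly wrong label.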

We conduct experiments on our dataset to study:

We find that:

Intent detection and slot filling are important tasks in spoken and natural language understanding. However, Vietnamese is a low-resource language for these research topics. In this paper, we present the first public intent detection and slot filling dataset for Vietnamese. We also propose a joint model for intent detection and slot filling that extends the recent state-of-the-art JointBERT+CRF model with an intent-slot attention layer, which explicitly incorporates intent context information into slot filling via a “soft” intent label embedding. Experimental results on our Vietnamese dataset show that our proposed model significantly outperforms JointBERT+CRF.

Read the paper: https://arxiv.org/abs/2104.02021

PhoATIS and JointIDSF are publicly released at: https://github.com/VinAIResearch/JointIDSF


Mai Hoang Dao, Thinh Hung Truong, Dat Quoc Nguyen
