Effective and Robust AI for Dynamic Legged Robots

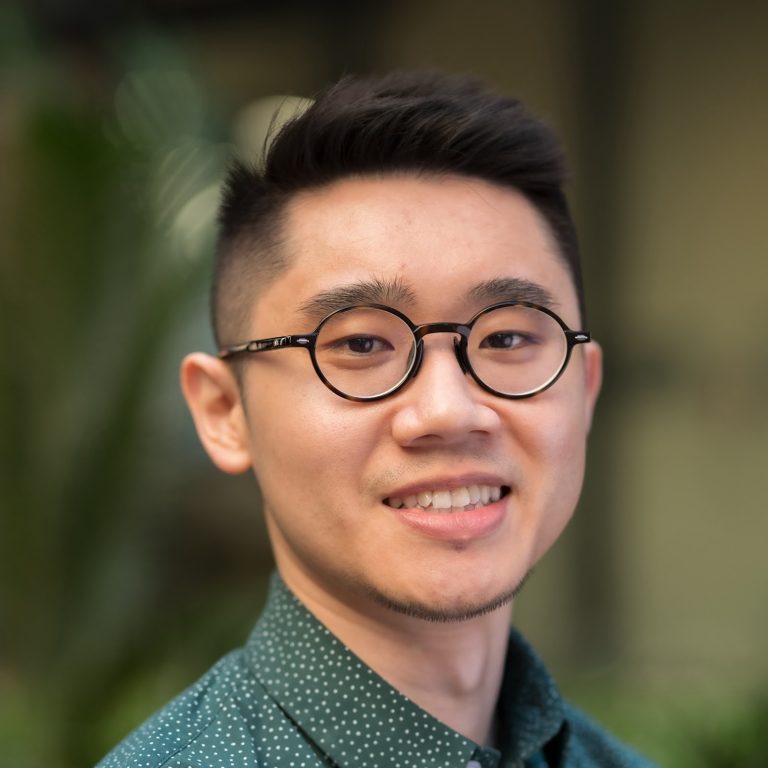

Quan Nguyen is an Assistant Professor of Aerospace and Mechanical Engineering at the University of Southern California. Prior to joining USC, he was a Postdoctoral Associate in the Biomimetic Robotics Lab at the Massachusetts Institute of Technology (MIT). He received his Ph.D. from Carnegie Mellon University (CMU) in 2017 with the Best Dissertation Award.

His research interests span control and optimization approaches for highly dynamic robotics, including nonlinear control, trajectory optimization, real-time optimization-based control, and robust and adaptive control. His work on the bipedal robot ATRIAS walking on stepping stones was featured in IEEE Spectrum, TechCrunch, TechXplore and Digital Trends. His work on the MIT Cheetah 3 robot leaping onto a desk was featured in many major media channels, including CNN, BBC, NBC and ABC. Nguyen won the Best Presentation of the Session at the 2016 American Control Conference (ACC) and was a Best System Paper Finalist at the 2017 Robotics: Science & Systems conference (RSS). Nguyen is a recipient of the 2020 Charles Lee Powell Foundation Faculty Research Award.

The mobility of man-made machines is still limited to relatively flat ground, whereas humans and animals can traverse almost any surface on Earth, including rocky cliffs and collapsed buildings. In this talk, I will pose the question “How can we make robots with similar morphologies achieve such extremely agile and robust behaviors?” Enabling robots to exhibit such behaviors will one day facilitate applications such as robotic space exploration, disaster response and construction. Furthermore, such time- and safety-critical missions require robots to operate swiftly and stably while dealing with high levels of uncertainty and large external disturbances. The talk will discuss our research on effective and robust AI that allows legged robots to learn aggressive locomotion skills, such as fast running and high jumping on rough terrain, while taking into account substantial model uncertainties in the robot dynamics. Our approach lies at the intersection of optimal control, trajectory optimization and deep reinforcement learning.