Image Gradients for Understanding and Improving Neural Networks

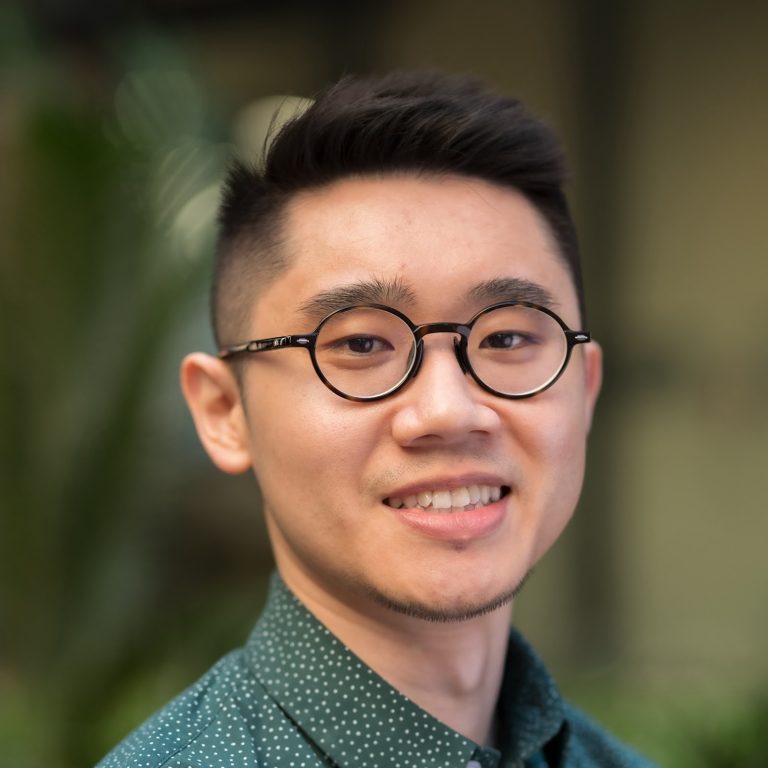

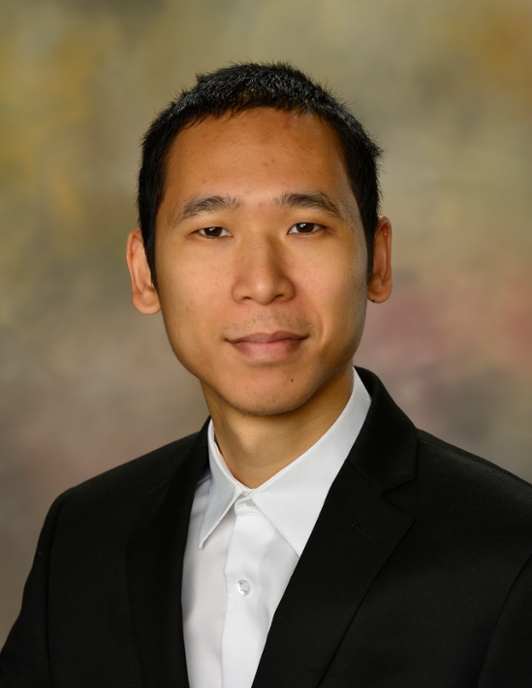

Anh Nguyen completed his Ph.D. in 2017, working with Jeff Clune and Jason Yosinski, and has since been an Assistant Professor at Auburn University. He has also worked at Apple and at Geometric Intelligence (acquired by Uber). In a previous life, Anh enjoyed building web interfaces at Bosch and invented a 3D input device for virtual reality (covered by MIT Technology Review). Anh is interested in making AI more robust and explainable, and in understanding its inner workings. Anh’s research has won 3 Best Paper Awards (CVPR 2015, GECCO 2016, and the ICML 2016 Visualization Workshop), a Best Application Paper Honorable Mention at ACCV 2020, and 2 Best Research Video Awards (IJCAI 2015 and AAAI 2016). His work has been covered by many media outlets, e.g., MIT Technology Review, Nature, and Scientific American, and featured in lectures at various institutions.

Machine learning practitioners often care about the gradients of their neural networks with respect to the network weights. However, the image gradient, i.e., the gradient of an image classification network's output (such as a class score or the loss) with respect to its input image, is also a powerful, indispensable tool in our ML toolbox. In this talk, we will discuss how this simple image gradient can be used for a wide range of tasks: synthesizing adversarial examples, visualizing neurons, training more robust networks, interpreting a classifier’s decisions, and even training better unconditional image generators.
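To make the idea concrete, here is a minimal sketch, not the speaker's code, of how one image gradient serves three of the uses listed above. It assumes a hypothetical toy classifier: a linear model over a flattened 4-pixel "image", so the input gradient can be written in closed form instead of coming from an autodiff library as it would for a real deep network. The names `W`, `b`, `logits`, `fgsm`, `saliency`, and `visualize_class` are illustrative. The adversarial example uses the Fast Gradient Sign Method (FGSM), the saliency map is the plain "vanilla gradient" magnitude, and neuron visualization is shown as gradient ascent on the input (activation maximization) for a class logit.

```python
import math

# Hypothetical toy "image classifier" (an assumption, not the speaker's model):
# a linear model over a flattened 4-pixel image, logits = W x + b, then softmax.
# Real classifiers are deep CNNs whose input gradient comes from autodiff; a linear
# model lets us write the gradient in closed form with only the standard library.
W = [[1.0, -2.0, 0.5, 0.0],   # weights for class 0
     [-1.0, 2.0, 0.0, 1.5]]   # weights for class 1
b = [0.0, 0.0]


def logits(x):
    return [sum(w * xi for w, xi in zip(row, x)) + bc for row, bc in zip(W, b)]


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def predict(x):
    z = logits(x)
    return max(range(len(z)), key=lambda c: z[c])


def loss_input_gradient(x, y):
    """Image gradient of the cross-entropy loss: dL/dx = W^T (softmax(Wx + b) - onehot(y))."""
    p = softmax(logits(x))
    delta = [pc - (1.0 if c == y else 0.0) for c, pc in enumerate(p)]
    return [sum(delta[c] * W[c][i] for c in range(len(W))) for i in range(len(x))]


def score_input_gradient(x, c):
    """Image gradient of the class-c logit. For this linear model it is row c of W,
    independent of x; for a deep network it would depend on x."""
    return list(W[c])


def sign(v):
    return (v > 0) - (v < 0)


def clip01(v):
    return min(1.0, max(0.0, v))


def fgsm(x, y, eps):
    """Adversarial example via FGSM: move every pixel by eps in the direction
    that increases the loss for label y, then clip to the valid range [0, 1]."""
    g = loss_input_gradient(x, y)
    return [clip01(xi + eps * sign(gi)) for xi, gi in zip(x, g)]


def saliency(x, c):
    """Vanilla-gradient saliency map: per-pixel magnitude of d(logit_c)/dx."""
    return [abs(g) for g in score_input_gradient(x, c)]


def visualize_class(c, steps=10, lr=0.1):
    """Activation maximization: gradient ascent on the input image to increase
    the class-c logit, starting from a gray image and clipping to [0, 1]."""
    x = [0.5] * len(W[0])
    for _ in range(steps):
        g = score_input_gradient(x, c)
        x = [clip01(xi + lr * gi) for xi, gi in zip(x, g)]
    return x


x = [0.6, 0.2, 0.5, 0.1]  # a toy "image" that the model assigns to class 0
x_adv = fgsm(x, y=0, eps=0.1)
print(predict(x), predict(x_adv))  # 0 1
print(saliency(x, c=1))            # [1.0, 2.0, 0.0, 1.5]
print(visualize_class(c=1))        # [0.0, 1.0, 0.5, 1.0]
```

A perturbation of at most 0.1 per pixel flips the toy model's prediction, which is the same mechanism behind adversarial examples for deep networks. One common way to train more robust networks, adversarial training, feeds such `fgsm` outputs back into each training batch alongside the clean images.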