Supervised Quantile Normalization for Matrix Factorization using Optimal Transport

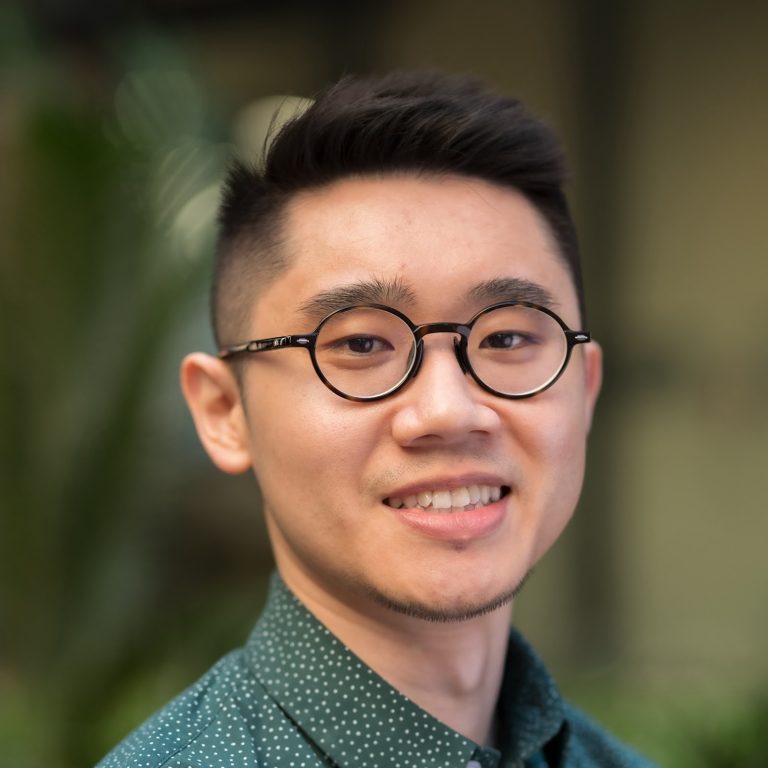

Marco Cuturi joined Google Brain in Paris in October 2018. He graduated from ENSAE (2001) and ENS Cachan (Master MVA, 2002), and obtained a PhD in applied mathematics from Ecole des Mines de Paris in 2005. He was a post-doctoral researcher at the Institute of Statistical Mathematics, Tokyo, from 11/2005 to 03/2007, and worked in the financial industry from 04/2007 to 09/2008. After serving as a lecturer in the ORFE department of Princeton University from 02/2009 to 08/2010, he was a tenured associate professor at the Graduate School of Informatics of Kyoto University from 09/2010 to 09/2016. He then joined ENSAE, the French national school for statistics and economics, in 09/2016, where he still teaches. His recent proposal to solve optimal transport problems with entropic regularization has re-ignited interest in optimal transport and Wasserstein distances in the machine learning community. His recent work focuses on using Wasserstein distances as a loss function for problems involving probability distributions, e.g. topic models and dictionary learning for text and images, parametric inference for generative models, regression with a Wasserstein loss, and probabilistic embeddings for words.

In this recent work (https://arxiv.org/pdf/2002.03229.pdf), we present a new application of our framework for “soft” sorting and ranking based on regularized optimal transport. We extend this framework to “soft” quantile normalization operators that can be differentiated efficiently, and apply them to dimensionality reduction: we ask how features can be normalized to a target distribution of quantiles so as to recover “easy-to-factorize” matrices. We provide algorithms for this task, empirical evidence that such matrices can be recovered, and applications to genomics.
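The abstract does not spell out the operator, so the following is only a rough NumPy illustration of the general idea behind OT-based soft quantile normalization, not the method from the paper. The entries of a vector are transported, with entropic regularization (log-domain Sinkhorn), onto evenly spaced quantile levels, and each entry is then replaced by a plan-weighted average of the target quantile values. The sigmoid squashing, the uniform weights, the evenly spaced levels, and the names `sinkhorn_plan` and `soft_quantile_normalize` are all hypothetical choices made for this sketch.

```python
import numpy as np


def _logsumexp(M, axis):
    """Numerically stable log(sum(exp(M))) along one axis."""
    m = M.max(axis=axis, keepdims=True)
    return np.squeeze(m, axis=axis) + np.log(np.exp(M - m).sum(axis=axis))


def sinkhorn_plan(a, b, cost, epsilon, n_iters=500):
    """Entropy-regularized OT plan between weights a (n,) and b (m,), via log-domain Sinkhorn."""
    f = np.zeros_like(a)
    g = np.zeros_like(b)
    for _ in range(n_iters):
        # Update dual potentials so the plan's row sums match a, then its column sums match b.
        f = epsilon * np.log(a) - epsilon * _logsumexp((g[None, :] - cost) / epsilon, axis=1)
        g = epsilon * np.log(b) - epsilon * _logsumexp((f[:, None] - cost) / epsilon, axis=0)
    # Plan P_ij = exp((f_i + g_j - C_ij) / epsilon).
    return np.exp((f[:, None] + g[None, :] - cost) / epsilon)


def soft_quantile_normalize(x, targets, epsilon=1e-2, n_iters=500):
    """Softly replace each x_i by target quantile values through an entropic OT plan.

    x: (n,) values to normalize; targets: (m,) sorted target quantile values.
    Uniform weights on both sides are an assumption of this sketch.
    """
    n, m = x.shape[0], targets.shape[0]
    a = np.full(n, 1.0 / n)
    b = np.full(m, 1.0 / m)
    # Squash standardized x into (0, 1) with a sigmoid (hypothetical choice).
    z = 1.0 / (1.0 + np.exp(-(x - x.mean()) / (x.std() + 1e-12)))
    # Place the m targets at evenly spaced quantile levels in (0, 1).
    levels = (np.arange(m) + 0.5) / m
    cost = (z[:, None] - levels[None, :]) ** 2
    P = sinkhorn_plan(a, b, cost, epsilon, n_iters)
    # Barycentric projection: each x_i becomes a P-weighted average of the targets.
    return (P @ targets) / P.sum(axis=1)


x = np.array([3.0, 1.0, 2.0])
targets = np.array([10.0, 20.0, 30.0])
print(soft_quantile_normalize(x, targets, epsilon=1e-3))  # close to [30, 10, 20]
```

With a small `epsilon` and as many targets as entries, the squared cost in one dimension makes the plan close to the monotone (sorted) matching, so the i-th smallest entry receives approximately the i-th target, as in hard quantile normalization. A larger `epsilon` blurs this assignment into a smooth, differentiable map, which is what allows such a normalization to be learned jointly with a downstream objective such as a factorization loss.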